While many were paying attention to the ISIS attack in Belgium, there was one bit of foreign policy news that went relatively unnoticed last week: the Russian tax regime, specifically with regard to petroleum. Although the Russian government decided to reduce its oil export duty by 9 percent in December, it looks like it is eyeing the oil industry once again. The problem is that the proposed Russian budget, which is supposed to be amended in April, assumes oil prices of $50/barrel when they're still stuck at around $35/barrel. Russia's budget deficit for 2016 will be at 3 percent if oil prices remain at $40/barrel. The New York Times (NYT) actually put out a very interesting article on the topic last week, which is worth the read. But let's ask ourselves: why should the world as a whole be concerned about Russia's budgetary shortfall?

Since oil prices started to drop in 2014, Russia has been in a recession. The International Monetary Fund (IMF) predicts that Russia won't pull out of the recession until 2017. While such factors as economic sanctions, stalled structural reforms, and weak investment have contributed to Russia's economic downfall, declining oil prices are particularly significant here because oil revenues account for about half of Russia's federal budget (IMF, Article IV Consultation, p. 10). Oil prices notwithstanding, the Russian economy isn't doing so well these days. Although Moody's rated Russia's Ba1 credit as stable back in December, it is now looking to downgrade Russia further into junk bond territory. Fitch is presently the only major credit rating agency that keeps Russia out of junk bond territory, which doesn't bode so well for Russia.

Per the NYT article, the Russian government is looking to tax the funds that oil companies use to invest in future oil production. This source of money was once considered sacrosanct, but desperate times call for desperate measures. Russian oil companies are currently making about $3 profit on a $35 barrel of oil, which is a profit margin of less than 10 percent. The Russian Ministry of Energy leaked a study saying that Russian oil output could fall to half of its current level by 2035. The Russian oil market is worrisome, and doesn't show immediate signs of amelioration. If the Russian government gives in to temptation, it would only be shooting itself in the foot. Many of Russia's current oil deposits are running out, and Russia cannot automatically rely on an increase in oil prices since, according to the U.S. Energy Information Administration, an excess of global oil supply will remain through much of 2017. In order for Russia to sustain its oil output, it would need to turn to less conventional sources, such as offshore and shale projects. The problem is that such operations require heavy capital investment. If the Russian government manages to undermine its oil production with onerously high taxes, it will only accelerate Russia's financial woes.

Let this once again be a lesson that you can't tax yourself into prosperity. If anything, it looks like Russia will tax itself into financial ruin. The Left-leaning Vox plays out some of the more drastic, but nevertheless plausible, scenarios: decreased military spending, cuts in social services, or even making up for the weakness by overcompensating via a more proactive military. Assuming the Russian government takes the expedient option, we will see Russia slip into increased irrelevance in world politics. The Russian people will certainly suffer as a result, but if we are to glean one thing from these trends, it's that Russia is declining into a territory that will put it far out of reach of its Cold War-era heyday.

The political and religious musings of a Right-leaning, libertarian, formerly Orthodox Jew who emphasizes rationalism, pragmatism, common sense, and free, open-minded thought.

Thursday, March 31, 2016

Monday, March 28, 2016

No, There Is Not Israeli Apartheid in the West Bank

For those who are pro-Israel and happen to be on a college campus this week, you'll notice the ever-so-annoying Israel Apartheid Week taking place. This is a time when "pro-Palestinian" student activists and the maliciously slanderous BDS movement dedicate an entire week to spreading lies about Israel being an apartheid state. I went over that claim four years ago during Israel Apartheid Week, and I found that apartheid does not take place in the land of Israel. Yes, there are acts of unfairness and discrimination in the land of Israel. No country is perfect. If we were to apply to other countries the mythical standards that anti-Israel activists apply to Israel, we would find that every country could be labeled an apartheid state, because every country has racial, socio-economic, and/or ethnic disparities on some level.

However, anti-Israel activists have taken a different approach. The emphasis is less on what happens to Arabs in Israel proper, and more on what happens to Palestinians in the West Bank and Gaza. Gaza is simpler to explain. Gazans voted the terrorist organization known as Hamas into power in the 2006 elections, and Hamas took full control of Gaza in 2007. Unsurprisingly, there have not been elections within the Palestinian Authority since then. Hamas is an anti-Semitic entity hellbent on wiping out Israel. There is no moral equivalency between Hamas and Israel. Hamas is fixated on the goal of Israel's annihilation, and aside from the Israelis who are victims of Hamas' attacks, the citizens within the Gaza Strip also suffer immensely from such totalitarian rule.

What about the West Bank? As Judge Richard Goldstone, the man infamous for the Goldstone Commission that inaccurately labeled Operation Cast Lead (Goldstone has since retracted the report), put it in his New York Times op-ed regarding the slanderous claim of Israel being an apartheid state, "The situation in the West Bank is more complex." Not that I am a fan of the United Nations, but the map below illustrates just how much of a mess the West Bank is. Per the Oslo Accords, Israel and the Palestinian government agreed to divide the West Bank into three areas: Area A, Area B, and Area C. While Area A accounts for a small percentage of the geographical area, it still accounts for the majority of the population in the West Bank. In Area A, the Palestinian Authority exercises full civil and security control. In Area A, the Fatah government deprives its citizens of their most basic liberties, as is illustrated by its Freedom House ranking, so the deprivation of Palestinians' basic rights is largely the doing of the Palestinian government itself, not the Israeli government. Area B is under full Palestinian civil control and joint Israeli-Palestinian security control. Area C, which accounts for the majority of the West Bank in terms of geographical area, is under full Israeli control for security, planning, and construction.

The problem that these seemingly arbitrary divisions cause for the citizens in the West Bank is that the checkpoints throughout limit travel within the West Bank. The checkpoints also came with a wall along the 1949 Armistice Line, which anti-Israel activists have derisively called the "apartheid wall." I think it's noteworthy to recall when the wall was erected in the first place. After the Six-Day War of 1967, Israel was able to avoid building a security wall for over three decades. It wasn't until the Second Intifada of the early 2000s that terrorist attacks originating from the West Bank became so numerous and severe that they necessitated the creation of the security wall. The number of terrorist attacks dropped precipitously once the wall was erected, and the wall has served as a legitimate barrier that largely prevents terrorist attacks from plaguing Israel. The Israeli Supreme Court has made rulings to minimize unreasonable burdens of the checkpoints and security wall (see here, here, and here), which is another example of Israeli accommodation to a complex situation. But let's be mindful of the fact that West Bank Palestinians are not citizens of Israel, and the 92,000 Palestinians who do enter Israel to work thus need permission to enter Israel proper. Let's also remember that the Palestinian government has been presented with multiple opportunities to create an independent state under a two-state solution, which would eliminate the need for an Israeli security presence, but the Palestinian government has rejected each opportunity.

The question that remains is whether such measures constitute apartheid, which means we need to go back to the definition of apartheid. The Rome Statute of the International Criminal Court of 1998 (Article 7, Section 2h) defines apartheid as "inhumane acts of a character similar to those referred to in paragraph 1, committed in the context of an institutionalized regime of systematic oppression and domination by one racial group over any other racial group or groups and committed with the intention of maintaining that regime." The United Nations General Assembly in 1973 defined it as "inhuman acts committed for the purpose of establishing and maintaining domination by one racial group of persons over any other racial group of persons and systematically oppressing them." Technically, the Palestinians aren't a race, which is one way this doesn't fall under the legal definition, but that is the least of the issues for those claiming that Israel is unleashing apartheid on the citizens of the West Bank. The important distinction to make with both of these definitions, and one that Goldstone brought up in his op-ed, is that Israel has no intention or desire of maintaining that domination, not to mention that Israeli forces consist not just of Jews, but also of Muslims, Christians, and Druze. These are not mere semantics, but critical distinctions that take Israel's behavior in the West Bank out of the realm of the legal definition of apartheid.

While there are aspects of Israel's presence in the West Bank that can be construed as cumbersome or unfair, the South African regime was racially motivated in its actions, whereas the Israeli government is motivated to stop the incessant Palestinian terror that has been wreaked, and continues to be wreaked, on Israel. Any de facto separation is due to security needs, not racism. Plus, the claim of Israeli apartheid rings hollow when Abbas is working on getting international recognition of Palestine as a state. An Israeli security presence does not mean Israeli domination, let alone apartheid. If that were the case, then an American presence in Afghanistan would be apartheid, and the terroristic measures that Palestinians have inflicted on Israelis over the years would have to be defined as apartheid because those measures dictate where Israelis go, when schools open and close, and when people suddenly have to run to bomb shelters. While it is true that Israel conducts security operations in the West Bank, it is also true that the Palestinian Authority has its own security forces, central bank, top-level Internet domain name, and executive branch. If these institutions exist, and if there is international recognition that Palestine (or at least the West Bank) is its own state, then that implies sovereignty. You can have sovereignty or you can be ruled by another country, but logically or legally speaking, you cannot simultaneously have both. Whether naysayers like it or not, the decisions of the Palestinian government dictate the vast majority of what takes place in the daily lives of Palestinians.

The ideal, certainly from a libertarian perspective, is that there is liberalized trade and flow of people. However, legitimate security concerns, such as those that exist within the Israeli-Palestinian conflict, impede such a laissez-faire actualization since it would mean a free flow of weapons used to terrorize Israeli citizens. Until there can be a viable peace process, or at least until Israel can stop being under the threat of attack from Gaza and the West Bank, the stalemate will continue: those in the West Bank will continue to feel oppressed, and the Israelis will continue to view the security wall and checkpoints as valid security measures. Much like calling the West Bank an occupied territory (it's actually a disputed territory... major difference!), the term "apartheid" is as false as it is counterproductive. Ultimately, I hope and pray that leaders on both sides can come together to find a feasible, lasting path to peace so that those in the region can lead [relatively] harmonious lives instead of being in a constant state of conflict.

Thursday, March 24, 2016

Mishloach Manot: Why Give Food on Purim?

'Tis the season of giving. No, I'm not referring to Christmas. I am referring to the Jewish holiday of Purim. Under Jewish law, there are four mitzvahs related to the holiday of Purim. The first two are hearing the reading of the Megillah and having a festive meal, also known as a seudah, towards the end of the holiday. The other two mitzvahs have to do with giving. One is to give [money] to at least two poor people, and that money needs to be enough for a meal (מתנות לאביונים). Today, I would like to focus on the fourth mitzvah: mishloach manot (משלוח מנות). Literally meaning "sending of portions," the practice of mishloach manot entails sending gift baskets of food and drink to family, friends, or other people in one's life. Unlike with the mitzvah of giving [money] to the poor, this mitzvah specifically involves giving food and drink. But why? Why does it have to be food and drink, and why does the food and drink need to be ready to eat?

The origins of mishloach manot are intriguing, as are the laws behind it. The prooftext used for the practice is the Book of Esther (9:19), where it says that the 14th of the month of Adar is a day of gladness and feasting, of joy, "and of sending portions to one another" (ומשלח מנות איש לרעהו). As for the reasoning behind the mitzvah, one reason is provided by the 15th-century rabbi Yisrael Isserlin, who said that the food was to ensure that each individual had enough to fulfill the mitzvah of the Purim seudah. This explanation has some difficulties, not only because the recipients of the gift baskets can be either rich or poor, but also because it is statistically likely that at least one person will not receive a gift basket. On the other hand, that could also explain why giving money to the poor is also a Purim mitzvah: even if a poor person does not receive mishloach manot, they would still receive money to celebrate Purim. And even if they do receive food on Purim, they can use the extra money to ease their financial woes. This explanation also bolsters the reason as to why mishloach manot has to come in the form of food, as opposed to some other good, e.g., clothing.

There is also a second and complementary reason that is traditionally provided for the practice. According to the 16th-century rabbi Shlomo Alkabetz (Manot Ha Levi), the practice is about engendering goodwill and a sense of Jewish unity. In the Book of Esther (3:8), Haman described the Jewish people as "one nation dispersed and divided." While giving tzedakah is preferably done under anonymity, the mitzvah of mishloach manot is not complete unless one knows the identity of the giver since the purpose is to create goodwill towards others, regardless of socio-economic status. This is certainly not to say that we disregard non-Jews (because Jewish law tells us to also have respect for non-Jews, help non-Jews, and have interpersonal relations with non-Jews). There is a time for universal values and interaction with the greater world (e.g., it is permissible to give to non-Jewish poor people in order to fulfill the Purim mitzvah of מתנות לאביונים), and there is a time for interactions with one's fellow Jew. Giving mishloach manot is a time to focus on the latter because while one of the goals of Judaism is engendering a more universal, moralistic ethos, one still has to start somewhere, and given the experience that Jews share, Jewish unity is a good place to start.

I've touched upon this idea before, and it is an idea that can be expressed in the adage of "three Jews, five opinions", which is that Jewish unity is a hard thing to come by. There are secular Jews who look at religious Jews as crazy, and there are religious Jews who look at non-Orthodox Jews as heretical. Regardless of where a Jew falls on the religious spectrum, there is a tendency to look at another Jew's practice as either too lax or too stringent. While the phenomenon of judging others based on disagreements or disputes is not unique to religious life (e.g., reactions to the 2016 United States presidential election), we are meant to use mishloach manot as a means to transcend such conflict and come together as a people. Abraham Lincoln was one to say that "a house divided against itself cannot stand," and the same goes for the Jewish people. Being Infinite Oneness, G-d is the perfect example of unity. While the Jewish people cannot exhibit unity to that extent, there is still something to be said for unity since greater cohesion leads to greater efficiency, whether that is in terms of doing particularistic mitzvahs (e.g., kosher food, Shabbat) or more universal mitzvahs, such as helping the poor or loving one's neighbor.

Mishloach manot is not just an expression of Jewish unity, but in more general terms, of giving and doing acts of loving-kindness for others. Purim is meant to be a holiday of joy. Giving is meant to be a manifestation of that joy, of that ability to bring people together. Through the act of giving mishloach manot, we are reminded that unity is more important than divisiveness, peace more important than conflict, and joy more important than misery.

Monday, March 21, 2016

Negative Interest Rates: Should the Federal Reserve Implement Them?

Monetary policy has been interesting in the past few years because of some of the unconventional tools that have been used since the Great Recession, most notably quantitative easing. Now another unconventional tool is making its way around the policy world: negative interest rates. Interest is the payment a borrower makes to a lender, on top of the principal, for the use of borrowed money. In banking, the interest rate is the percentage of principal that the lender charges the borrower to use the money for a certain period of time. Traditionally speaking, a customer or financial institution puts money in the bank to earn a return for allowing the bank to borrow it. The economy has gotten so bad that the interest rates that certain central banks are setting are now negative.

Take Japan as an example. At the end of January 2016, the Bank of Japan (BOJ) implemented a -0.1 percent interest rate, which is to say that the BOJ is charging banks a 0.1 percent fee to store their money with the BOJ. Upon this announcement, the TOPIX Banks Exchange Traded Fund, which is a Japanese exchange-traded fund, dropped about 28 percent over roughly the following month before it even started recovering. Japan's central bank is not the only central bank to charge negative interest rates. Interest rates have also gone sub-zero in the Eurozone, Switzerland, Sweden, and Denmark. The reasons why these European entities are going for negative interest rates vary: the European Central Bank is trying to prevent deflation, Denmark wants to maintain its peg to the euro, Switzerland wants to disincentivize currency appreciation via mass foreign inflows, and Sweden wants to create inflation. Even if successful, these policies would provide few insights for the United States, which I'll elucidate momentarily.

Essentially, central banks are charging institutional depositors [above a certain reserve threshold] instead of paying them. Although it might seem counterintuitive because cash carries an implicit rate of 0 percent, some would still store money at a negative interest rate because money, especially in large sums, can be quite costly and risky to store. A modest fee can be viewed as better than the alternative. There are a couple of purposes to negative interest rates. One is to help meet inflation targets set by the central bank to make sure there is neither hyperinflation nor deflation, and the other is to nudge commercial banks to lend more money to businesses and consumers (instead of racking up large balance sheets with the central bank) in order to generate a wealth effect. Lower interest rates make savings less attractive and borrowing more attractive, which is what these central banks are trying to encourage. Since oil prices have plummeted and global economic growth is very modest, these central banks are not worried about the inflationary pressures that would typically accompany such policy (more on that below).
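To make the mechanics concrete, here is a minimal sketch in Python of how a charge on reserves above an exempt threshold could be computed. The function name and all of the figures are hypothetical and chosen only for illustration; this is not how any particular central bank actually calculates its charges.

```python
def annual_reserve_charge(balance, threshold, rate):
    """Yearly interest on reserves held above an exempt threshold.

    A negative result means the depositor pays the central bank.
    All names and inputs here are illustrative assumptions.
    """
    # Only the portion of the balance above the threshold earns (or pays) interest.
    excess = max(balance - threshold, 0.0)
    return excess * rate


# Hypothetical figures: 1,000 units on deposit, 600 units exempt,
# and a policy rate of -0.1 percent.
charge = annual_reserve_charge(balance=1000.0, threshold=600.0, rate=-0.001)
print(f"Interest on reserves: {charge:.2f}")
```

With these made-up numbers, only the 400 units above the threshold are subject to the negative rate, which is why the fee stays modest relative to the cost and risk of storing large sums of cash.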

We see that some other countries are doing it, but there is a question of whether such a policy would take place in the United States. Federal Reserve Chairwoman Janet Yellen stated back in February that negative interest rates are not off the table, but judging by her press conference last week, they are not currently being discussed. In practice, I'm presently not worried that Yellen would actually go the route of negative interest rates because a) the Federal Reserve recently increased interest rates for the first time in about a decade, and b) a lot would need to go wrong in the economy for Yellen to even consider it, let alone actually do it. While the Federal Reserve is not making any moves towards negative interest rates, former Federal Reserve Chairman Ben Bernanke published a policy brief last week on whether the Federal Reserve should use that tool in the event of an economic slowdown.

As Bernanke points out, it's not the first time the United States has dealt with real interest rates that were negative. The issue is that we are now talking about negative nominal interest rates (here is an explanation of the difference between nominal and real). Although I can see the intuition behind central banks instituting negative interest rates, I can also see the reasoning behind the concerns expressed by the Economist, Bloomberg, and the Wharton School of Business, the last of which is ranked as one of the top business schools in the country. If a commercial bank decides to pass on the cost of the negative interest rate to its customers, those customers are more likely to pull their money out of the bank. In a worst-case scenario, that could lead to a bank run, which would be disastrous. This would be quite ironic considering that one of the reasons for such a policy is to encourage a freer flow of funds throughout the financial sector. Even if the banks absorb the costs, the loss of profit could put downward pressure on bank stocks, which would disrupt global equity markets. Additionally, it would make banks more reluctant to lend money in the future. This could also affect foreign exchange markets: the bigger the differential between a negative domestic interest rate and a positive interest rate in another country, the greater the incentive to invest abroad, which would exacerbate the already-anemic economic growth, particularly in Europe and Japan. If investors do seek better rates of return in other countries, the domestic currency would depreciate. As a matter of fact, we have seen the euro depreciate against the dollar since the European Central Bank set negative interest rates back in mid-2014. Much as it has with low but positive interest rates, the World Bank has expressed concerns about the undesirable effects on capital market functioning and the erosion of bank profitability. The Bank for International Settlements (BIS) expressed concern in a policy brief earlier this month by saying that prolonged negative interest rates come with "great uncertainty."

As the BIS report points out, more empirical evidence on the matter is needed, which makes sense considering how new this monetary policy tool is. Even so, I still have to retain my skepticism. For one, the United States has already tried lowering interest rates, as well as using quantitative easing, to boost economic growth, with little success. Not only would negative interest rates be just a larger attempt to encourage borrowing via interest rates, but they would also disincentivize savings because, although central banks can only control short-term interest rates, those rates do have an effect on long-term interest rates. Another reason for skepticism is that there has not been a time in the history of monetary policy when a country generated wealth by devaluing its currency. Regardless of whether we are talking about Japan, Europe, or the United States, I think that there are tax and regulatory reforms that could be implemented without resorting to this currency manipulation. Whether we like it or not, negative interest rates are not going away anytime soon. I would like to see what the empirical evidence has to say on the matter, but at the same time, I'm not going to hold my breath for positive results.

9-10-2016 Addendum: The Brookings Institution recently published an article arguing that negative interest rates are not effective.

Friday, March 18, 2016

Why Food Stamps Should Come With Work Requirements

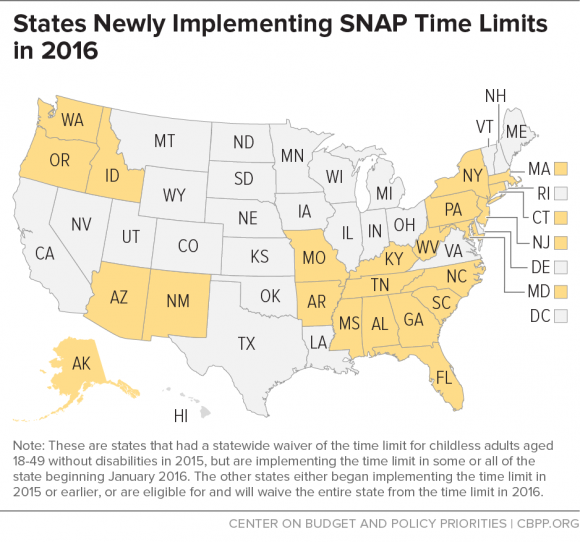

About two and a half years ago, I wrote a thorough piece on reforming the Supplemental Nutrition Assistance Program (SNAP), commonly referred to as food stamps. I went through ten possible means of reform, and I concisely analyzed each one. Considering the issues that come with SNAP, one can only hope that reform takes place. Since I wrote that entry, one of the reforms has actually come to fruition. Over the course of 2016, 23 states will have implemented time limits of three months on receiving SNAP benefits (see map). This will bring the count to 40 states with time limits. The time limit originates from the 1996 welfare law, which included a provision allowing a state to waive the time limit if its unemployment is unusually high. Since unemployment has been dropping in many states, the waiver no longer applies for those states, hence the reinstatement of the time limits.

The purpose of the time limit, enforced via a work requirement, is to incentivize individuals to regain employment shortly after losing a job. This incentive exists so that individuals don't become dependent on government welfare, and so that what is supposed to be a safety net can remain solvent in the long run. There are those, such as the analysts from the Left-leaning Center on Budget and Policy Priorities (CBPP), who view the work requirements as too punitive. From the CBPP's point of view, the work requirements amount to a severe time limit that punishes those who are actively looking for work but simply cannot find it.

What comes off as punitive to some might actually be necessary for the longevity of the program. Much like unemployment benefits, food stamps create a disincentive to work. Using the most recent data from the US Department of Agriculture (USDA), one can see that from 2008 to 2012, 52 percent of SNAP recipients had been on food stamps for over two years. Although the data are not that recent, they cover a time period in which a) countercyclical demand would boost the need for SNAP benefits, and b) the vast majority of states had waived their time limits. Although the Great Recession played a role, what really bolstered SNAP recipient numbers was increasingly lax eligibility requirements and active outreach from the USDA to recruit more recipients. Using USDA data [Table A.16], we can also see that 83.1 percent of adults who are capable of work and have no dependent children (able-bodied adults without dependents, or ABAWDs) and who are on SNAP don't work. A lack of work requirements reduces the number of people working (Hoynes and Schanzenbach, 2011). The State of Kansas serves as an example of how reinstating work requirements made ABAWDs receiving SNAP benefits more likely to actually go to work (Ingram and Horton, 2016).

Not only would work requirements help individuals move off government dependency, but they would also help from a budgetary standpoint. Looking at Congressional Budget Office (CBO) projections, the budget outlays for SNAP are not expected to significantly decrease over the next decade. According to the Right-leaning Heritage Foundation, reinstating work requirements for ABAWDs would create an estimated savings of $9.7 billion annually. I would agree with the CBPP that there should be better job training opportunities for SNAP recipients (particularly for those who are extremely poor), but I still contend that social safety nets should not devolve into long-term dependency on government benefits. Social safety nets should encourage and expect people who are readily available to work to do so. Without such incentives as work requirements, we merely perpetuate the cycle of economic stagnation and frustration for a sizable number of Americans.

Wednesday, March 16, 2016

Trump's Tariff Tomfoolery: Whatever Happened to Free Trade?

Donald J. Trump, who is currently the frontrunner in the Republican presidential race, has made quite a few controversial statements since he started running for president. I thought it was bad enough when he proposed mass deportation of undocumented immigrants, but his statements on trade have had me scratching my head as of late. Trump has gone as far as saying we need to put a 45 percent tariff on imports from China, as well as a 35 percent tariff on Ford vehicles coming from Mexico. Trump's idea is that a tariff would encourage production to come to the United States in order to "make America great again." Trump is playing to the populist idea that foreign competition causes a loss of American jobs and stagnates American wages. That much is certain. Even setting aside that outsourcing is nowhere near as bad as opponents make it out to be or that "Buy American" is economically inefficient, would tariffs have the effects that Trump is promising?

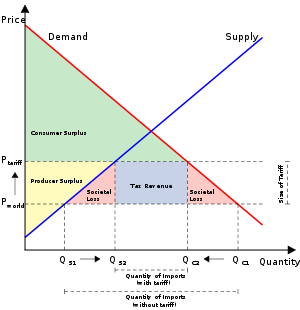

First, a brief explanation of a tariff. Essentially, a tariff is a tax or duty on goods entering or leaving the country. These taxes on imported or exported goods are meant to increase the price of a good, thereby making it more difficult for that good to cross the border. As the supply-demand graph of a domestic market shows, the effects are unevenly spread out. Most obvious is that the government collects tax revenue. Domestic industries also benefit since they face reduced competition. The real losers of a tariff are consumers, since tariffs result in higher prices of goods. There is also deadweight loss (also referred to as societal loss) that takes place with a tariff, much like it does with any other tax. Unsurprisingly, the tariff benefits the few [who are the politically connected] at the expense of the American consumer.
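For readers who prefer numbers to graphs, the four effects described above can be sketched in a few lines of Python. This is a textbook-style illustration and not a model of any actual tariff proposal: it assumes straight-line domestic demand and supply curves, an import tariff, and a country too small to move the world price. Every number and name in it (the curve coefficients, `tariff_effects`, `TariffEffects`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TariffEffects:
    consumer_loss: float    # consumer surplus lost to higher prices
    producer_gain: float    # extra surplus captured by domestic producers
    tariff_revenue: float   # tax collected by the government on imports
    deadweight_loss: float  # loss to society that nobody recovers


def tariff_effects(world_price, tariff, a=100.0, b=2.0, c=0.0, d=2.0):
    """Welfare effects of a per-unit import tariff in a small economy.

    Assumed (hypothetical) linear curves:
      demand(p) = a - b * p,  supply(p) = c + d * p
    The domestic price rises from world_price to world_price + tariff.
    """
    def demand(p):
        return a - b * p

    def supply(p):
        return c + d * p

    p0, p1 = world_price, world_price + tariff
    # With linear curves, each surplus change is the area of a trapezoid.
    consumer_loss = tariff * (demand(p0) + demand(p1)) / 2
    producer_gain = tariff * (supply(p0) + supply(p1)) / 2
    imports_after = demand(p1) - supply(p1)
    tariff_revenue = tariff * imports_after
    # Whatever consumers lose that no one else gains is deadweight loss.
    deadweight_loss = consumer_loss - producer_gain - tariff_revenue
    return TariffEffects(consumer_loss, producer_gain,
                         tariff_revenue, deadweight_loss)


result = tariff_effects(world_price=10, tariff=5)
print(result)
```

With these made-up curves, consumers lose 375, domestic producers gain 125, the government collects 200, and the remaining 50 is deadweight loss. The gains to producers and the government never add up to what consumers give up, which is the sense in which a tariff is a net loss to society.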

This is not simply a matter of economic theory or of a general consensus amongst economists that tariffs reduce economic welfare, thereby lowering the standard of living. The Right-leaning American Action Forum released a policy brief last week on Trump's proposal to raise tariffs on China and Mexico to 45 and 35 percent, respectively. The end result could cost Americans up to $250 billion per year! This is hardly the first time that tariffs have been shown to have an adverse impact on citizens. A few more examples:

- According to a paper from the National Bureau of Economic Research, a freer economy translates into greater economic growth (Estevadeordal and Taylor, 2008).

- The Right-leaning Heritage Foundation shows how tariffs make Americans poorer and reduce their economic welfare (Riley, 2013).

- The Federal Reserve found that economic welfare losses of tariffs were high enough to actually encourage monopolies (Schmitz, 2012).

- Developing nations with lower trade barriers have lower poverty rates (Bergh and Nilsson, 2011), which was notable in Mexico (Hanson, 2005), India (Goldberg, 2009), Vietnam (Heo and Doanh, 2009), and Indonesia (Kis-Katos and Sparrow, 2013).

- Fewer trade barriers positively correlate with greater happiness (Kaupa, 2012).

- The Federal Reserve Bank has shown that the United States has, on the whole, benefitted from trade with China (Caliendo et al., 2015).

- Greater trade helps the poor by lowering cost of living (Fajgelbaum and Khandelwal, 2015; Marchand, 2012).

- A World Bank Study shows that liberalizing trade, as opposed to higher tariffs, means greater economic growth (Wacziarg and Horn Welch, 2008).

- The World Health Organization found that pharmaceutical tariffs are a regressive tax that adversely targets the sick (Olcay and Laing, 2005).

- Greater international trade is correlated with a higher per capita income (Feyrer, 2009).

- This policy brief from the Mercatus Center addresses some of the myths surrounding free trade (Boudreaux, 2015).

While there are many more studies that I could elaborate on, the point I am making is that study after study shows that tariffs are problematic. As economists have pointed out, freer trade is better for economic wellbeing as a whole. Rather than give in to protectionist myths and scare tactics about losing jobs, we should encourage presidential hopefuls, and indeed all politicians, to liberalize trade.

3-27-2016 Addendum: In case there were not enough reasons to be worried about Trump's tariff plan, the chief analyst at Moody's Analytics just ran a model with Trump's proposed tariff rates, and the end result would be over 3 million fewer American jobs and an economy that would be 4.6 percent smaller by 2019.

9-25-2016 Addendum: The Peterson Institute recently released a paper on the presidential candidates and what would happen if their trade plans were fully implemented. For Trump, his trade plan of a full-out trade war would mean recession and increased unemployment.

10-6-2016 Addendum: The University of Chicago's Initiative on Global Markets asked its panel of economic experts whether tariffs were good policy, and not a single one thought that enacting tariffs to encourage production in the United States is a good idea. Nice to see economists agree on something!

1-17-2017 Addendum: New research (Furman et al., 2017) shows how tariffs act as a regressive tax that most adversely affects the poor.

Labels: Economy, Foreign Affairs and International Studies, Free Markets, International Trade, Tariffs, Taxes, Trump

Friday, March 4, 2016

Parsha Vayachel: Why Prohibit Lighting Fires on Shabbat? For Gratitude's Sake

There is an old proverb: "Kindle not a fire you cannot extinguish." Fire plays quite the role as a literary device. After all, fire has as much potential to destroy as it does to create warmth and energy. It also plays a role in this week's Torah portion. At the beginning of this week's Torah portion (Exodus 35), Moses talks about the Tabernacle, specifically how to contribute to it and how to construct it. However, before beginning with those details, Moses provides a two-verse segue about the Shabbat. In it, he reminds the Jewish people that it's forbidden to do work on the seventh day (i.e., Shabbat), and that whoever does so will be "put to death" (Exodus 35:2). He then brings up the example of not kindling any fire on Shabbat (ibid. 35:3). The only other act that the Torah explicitly mentions [as prohibited on Shabbat] is gathering wood (Numbers 15:32-36). As those who observe Shabbat, or are even aware of the laws governing it, know, there are far more than just two laws about Shabbat. There are 39 main types of acts that are prohibited, which can be divided into literally hundreds of laws. My question for today is as follows: why is it that Moses singles out the act of kindling a fire?

Before continuing, I have to preface this by saying that when referring to "work," we're not talking about going into the office for your 9-to-5 job, Monday through Friday. The Hebrew word for that type of work is avodah (עבודה). The word used in Exodus 35:2, melachah (מלאכה), refers to creative acts (also see Exodus 35:33). It's because of the juxtaposition between Shabbat and the Tabernacle in this verse that the Sages [in the Mishnah] interpreted melachah to mean the acts used to build the Tabernacle. Juxtaposition is a standard hermeneutical tool to interpret Torah. However, I find it to be a problematic interpretation because many "creative acts" were not included in the list, including setting the table, washing before and after eating, and cleaning up. Even if the laws surrounding Shabbat evolved in a more arbitrary fashion, let's return to the initial question: Why is the prohibition of kindling fire singled out in this passage?

One reason is, as Sforno pointed out, that fire was necessary for completing so many acts of work. In pre-modern times, fire was one of the single greatest things to help with the advancement of civilization. Nowadays, electricity plays such a role. Even if you don't buy the argument that electricity scientifically functions the same as fire does (I don't!), it certainly has as much impact in 21st-century living as fire did in pre-modern times with regard to being able to create and advance (R. Samson Raphael Hirsch), which is one of the reasons I would argue that the prohibition of turning on electrical items during Shabbat still holds. To elaborate on this point, as the Sfat Emet illustrates, the prohibition of fire is to remind us that by not building and creating, we can better appreciate the work we do during the other six days of the week.

Rabbi Yeshaya Halevi Horowitz, also known as the Shlach HaKodesh, gives a more figurative interpretation by saying that the fire refers to the fire of anger and disputes. Shabbat is not just about physical rest. It is about a level of moral sanctity in which we are not meant to start such fires. With Shabbat, it is about conditioning one's mind and soul to develop a certain level of inner peace. Ideally, we should not be angry ever (Maimonides, Mishneh Torah, Hilchot De'ot, 2:3), but this holds especially true on a day when we are supposed to let go of our travails and our worries. The reason that this melachah is singled out is that Shabbat typically gives us more opportunities to interact with other people. We're not at our computers or glued to our smartphones. Whether it's going to shul or being around the Shabbat table for a meal, we have face-to-face interaction with people, which can be messy. We are meant to transcend that to build what R. Abraham Joshua Heschel called a "sanctuary in time."

These two interpretations of the verse teach us that we gain perspective on the world and how to interact with it as a result. We are not meant to just toil away and constantly work. We are not meant to be constantly in tension. Not being on the iPhone or working in front of a laptop means stopping the rat race for a day and taking the time to appreciate what's around you. And not starting arguments or disputes means we can let go and be in the moment. It means the petty or trivial is not as important as family, camaraderie, or seeking holiness in an otherwise mundane world. By not igniting fires on Shabbat, whether they be literal, symbolic, or figurative, we can express our gratitude for what we have and what we have accomplished.

Before continuing, I have to preface that when referring to "work," we're not talking about going into the office for your "9-5 job on Monday to Friday". The Hebrew word for that type of work is avodah (עבודה). The word used in Exodus 35:2, melachah (מלאכה), refers to creative acts (also see Exodus 35:33). It's because of the juxtaposition between Shabbat and the Tabernacle in this verse that the Sages [in the Mishnah] interpreted melacha to mean the acts used to build the Tabernacle. Juxtaposition is a standard hermeneutical tool to interpret Torah. However, I find it to be a problematic interpretation because many "creative acts" were not included in the list, including setting the table, washing before and after eating, and cleaning up. Even if the laws surrounding Shabbat evolved in a more arbitrary fashion, let's return to the initial question: Why is the prohibition of kindling fire singled out in this passage?

One reason is, as Sforno pointed out, that fire was necessary for completing so many acts of work. In pre-modern times, fire was one of the single greatest things to help with the advancement of civilization. Nowadays, electricity plays such a role. Even if you don't buy the argument that electricity scientifically functions the same as fire does (I don't!), it certainly has as much impact in 21st-century living as fire did in pre-modern times with regards to being able to create and advance (R. Samson Raphael Hirsch), which is one of the reasons I would argue that the prohibition of turning on electrical items during Shabbat still holds. To further elucidate upon this point, as the Sfat Emet illustrates, the prohibition of fire is to remind us that by not building and creating, we can better appreciate the work we do during the other six days of the week.

Rabbi Yeshaya Halevi Horowitz, also known as the Shelah HaKadosh, gives a more figurative interpretation by saying that the fire refers to the fire of anger and disputes. Shabbat is not just about physical rest. It is about a level of moral sanctity in which we are not meant to start such fires. Shabbat is about conditioning one's mind and soul to develop a certain level of inner peace. Ideally, we should never be angry (Maimonides, Mishneh Torah, Hilchot De'ot, 2:3), but this holds especially true on a day when we are supposed to let go of our travails and our worries. The reason that this melachah is singled out is that Shabbat typically gives us more opportunities to interact with other people. We're not at our computers or glued to our smartphones. Whether it's going to shul or being around the Shabbat table for a meal, we have face-to-face interaction with people, which can be messy. We are meant to transcend that to build what R. Abraham Joshua Heschel called a "sanctuary in time."

Tuesday, March 1, 2016

Why Supreme Court Justices Need Term Limits

Since the death of Supreme Court Justice Antonin Scalia, the politics around the Supreme Court have been quite nasty. Contention has been high over whether politicians can get away with denying Supreme Court nominees during an election year. This sort of political tension is unique because the United States is the only developed nation where the justices of a federal judiciary system have lifetime tenure. Because of all the hullabaloo, a single, 18-year term for Supreme Court justices has become a popular policy alternative to lifetime tenure.

When the United States Supreme Court first started, the idea behind lifetime tenure was to shield judges from political influence. Keep in mind this was when the Supreme Court had less power, and life expectancy was nearly half what it is now. But perhaps being shielded from populist whims is a good idea, especially in an election where Trump and Sanders are two major presidential candidates who hope to shove their version of populism down the throats of the American people if elected. Perhaps making confirmation hearings more frequent would only make things more politically contentious than they already are.

Even if the level of contentiousness does not decrease, one could argue that term limits would lead to greater dynamism. A lack of turnover usually means deadened thinking, and the justices stay mentally sequestered in their own form of an ivory tower (although to be fair, even with an increasing number of 5-4 rulings, fewer than 25 percent of Supreme Court rulings are 5-4 rulings). The other problem with such isolation is that justices can and do evolve into something that was not intended at the time of nomination. Justices John Paul Stevens, Anthony Kennedy, and John Roberts come to mind.

Justices are meant to enforce the law, strike down unconstitutional legislation, and guarantee constitutionally protected rights. When justices write laws from the bench, much like was done with the Obamacare cases of National Federation of Independent Business v. Sebelius (2012) and King v. Burwell (2015), they overstep that role. If power corrupts and absolute power corrupts absolutely, imagine what lifetime tenure does. And let's not forget other issues, such as intellectual autopilot, decrepitude, diminished productivity, and eroded legitimacy caused by what has become a quasi-monarchical position with less accountability over time. As was asked so succinctly by Nobel Prize-winning economist Gary Becker: "Do we really want eighty-year olds, who have been removed from active involvement in other work or activities for decades, and who receive enormous deference, in large measure because of their great power, to be greatly influencing some of the most crucial social, economic, and political issues?" Not really, no.

So how do we solve this issue? While ignoring justices or holding impeachment elections when judges illicitly rule sound like nice ideas at first glance, I still think the best bet is a single term for justices. Lifetime tenure seemed like a nice idea when justices weren't living as long, and when fewer and less contentious cases were presented before the Supreme Court. The dynamic has since changed. While I know it would take cooperation in the legislative branch to temper the judicial branch, it would still be worthwhile to improve upon the checks and balances system that the Founding Fathers held so dear. Single-term appointments preserve immunity from political forces while still providing a steady stream of justices so that things don't get stale or entrenched at the Supreme Court. While the Court's approval rating is not as bad as that of Congress, I think that term limits would both restore integrity to the Court and reverse what has been a declining trend in approval of the Supreme Court.